2025 Learning Log

In keeping up with my goal to do more learning this year, I’m allotting some time outside the daily grind for learning.

My overarching goal is to explore popular data engineering tools such as dbt, and cloud technologies such as Azure Fabric and Snowflake. I haven’t worked in Databricks since 2021 so it’ll be a good opportunity to re-learn and catch up with the new developments.

Here are some notes and impressions of how the learning is coming along so far, written in reverse chronological order.

I’ve moved some notes to separate posts

81: 2025-08-02 (Saturday) - Generative AI, Transformer

AI is the concept of programming a computer to react to data in a similar way as thinking beings. Generative AI allows the creation of new content from scratch.

While traditional programming inputs data and rules in order to come up with answers, machine learning uses data and answers in order to come up with rules. Supervised machine learning is a type of machine learning. It requires the input of labelled data in order to produce inferences about unlabelled objects. It is the most often used form of AI in the industry. Supervised machine learning is the foundation of LLMs (large language models).

Transformer models have two key concepts: attention, and encoder - decoder. Attention allows the model to predict the next words by focusing on key words. It involves tokenization (breaking down the sentence into words or sometimes pieces) and turn them into vector embeddings (mathematical representations of those words’ meanings). The embeddings are adjusted based on context derived from surrounding tokens. Encoder applies attention by looking at all parts of an input, and determining the most important features. Encoder distills a large input into context vectors. Decoder reverses this process. By using the context from the encoder, it is able to intelligently suggest new content.

Paper that introduced transformers to the world: “Attention is All You Need”

80: 2025-07-28 (Monday) - Query plan and optimization

There are different types of joins: Hash match (slowest), nested loop match (one small table, one large table – better for the large table to be indexed), and merge match (large tables, sorted on join).

Adding with recompile when creating stored procedures results in SQL server creating a new plan guide every time the stored proc runs (if not, it will use the plan guide it generated on its first run).

Use hints sparingly, only on necessary occasions.

Use parametrized queries instead of dynamic queries.

These options

1

2

3

4

5

6

7

8

SHOW STATISTICS IO ON

GO

SHOW STATISTICS TIME ON

GO

SET SHOWPLAN_ALL ON

GO

79: 2025-07-27 (Sunday) - Explanable AI, and AI Engineering

I attended a talk on explainable AI (XAI) – which looks to be similar to explainable models? In addition to Shapely (SHAP - which has been around for a while), I learned other algorithms like LIME (Local Interpretable Model agnostic Explanations), and ProtoPNet.

While I’m being a bit more studious, I also looked closer at AI Engineering - which builds on top of foundation models, so it’s closer to software engineering than Machine learning (AI developers).

Resources:

This book AI Engineering by Chip Huyen is a good reference. DeepLearning.ai also contains a range of courses on this topic.

78: 2025-07-08 (Tuesday) - Databricks

Topic: Delta Live Tables (DLT) Pipelines and Notebooks

78: 2025-07-07 (Monday) - Databricks

Topic: Delta Live Tables (DLT) Overview

77: 2025-07-04 (Friday) - Databricks

Topic: Delta Lake

76: 2025-07-03 (Thursday) - Databricks

Topic: Delta Lake

75: 2025-07-02 (Wednesday) - Databricks

Topic: Spark Structured Streaming

74: 2025-07-01 (Tuesday) - Databricks

Topic: ETL with Apache Spark - Transforming Data

73: 2025-06-30 (Monday) - Databricks

Topic: ETL with Apache Spark - Transforming Data

72: 2025-06-27 (Friday) - Databricks

Topic: ETL with Apache Spark - Querying Data

71: 2025-06-26 (Thursday) - Databricks

Topic: ETL with Apache Spark - Overview

70: 2025-06-25 (Wednesday) - Databricks

Topic: Introduction to Unity Catalog

69: 2025-06-24 (Tuesday) - Databricks

Topic: Databricks Workspace Components

68: 2025-06-23 (Monday) - Databricks

Topic: Introduction to Databricks Lakehouse Platform

I’ve started reviewing Databricks as it’s been a while, and there is now a free edition that is unattached to a cloud provider. I’ve following this course Databricks Certified Data Engineer Associate - Ultimate Prep.

67: 2025-06-13 (Friday) - Pre-commit framework

- framework for managing and maintaining pre-commit hooks

- CLI tool for managing various linter tools in Python (i.e. anything that does static code analysis)

- installs special scripts in the pre-commit hooks

Install the framework: pip install pre-commit

Use the framework (modifies the existing pre-commit hook file in the .git folder

pre-commit install- only checks against staged files excluding

.yamlfiles or config files

e.g. Generate a .pre-commit-config.yml file

1

pre-commit sample-config > .pre-commit-config.yaml

Use with darker if wanting to lint only within changed regions since a commit.

66: 2025-06-12 (Thursday) - Databricks Free Edition, git hooks

Databricks Free Edition

Databricks has released a Free Edition which includes full access to most features of the platform albeit limited to smaller compute and warehouse sizes, and other fair use limits. It does not require a credit card to sign up, and comes equipped with environment that enables data science, AI, and ML application development.

Git pre-commit hook

Git hooks scripts can be found in .git/hooks folder of the a git repository. Remove the .sample suffix to enable.

E.g. in file .git/hooks/pre-commit add

1

/bin/bash $GIT_DIR/check-code-quality.sh || exit -1

to run the given shell script every time git commit is called. $GIT_DIR points to the path of the git repository i.e. where the .git folder is located. If the variable is not set, use $PWD instead. Adding || exit -1 does not run the rest of the pre-commit script when the line fails

Skip executing the pre-commit script by adding --no-verify flag in git commit

65: 2025-06-11 (Wednesday) - Code complexity

Principle of least surprise

- aka Principle of Least Astonishment (POLA)

- software design guideline; design software systems in a way that minimizes confusion and unexpected behavior for users and developers

Metrics (imperfect) to spot complex code

- e.g. Cyclometric Complexity (CC) - the number of decisions in the code or the number of branching paths it has

Tools

- Radon

- flake8 plugin; set line in

.flake8file e.g.radon-max-cc = 10

- flake8 plugin; set line in

- McCabe

- integrated with flake8 by default

- Xenon

- based on Radon

1

radon cc code.py

Pick metrics that are intuitive (can be interpreted), metrics are useful but shouldn’t be pushed too far

64. 2025-06-10 (Tuesday) - Git, Github, Continuous Integration

Topic: git, Github, CI, Clean code (code quality)

Code quality questions for code review

- Does it work? -> automated testing

- Can I understand it? -> style

- Is it safe?

- Do we even want this? (no feature creep)

Integrate early, integrate often: PR/merge frequently with small, focused changes instead of a few giant changes.

Idea of: Pair or mob programming instead of code review to speed up production

Resources

63. 2025-06-05 (Thursday) - SQL Transactions

Pending separate post: What are transactions, implicit and explicit transactions, mark a transaction, TRANCOUNT, scope and type of locks, isolation levels

62. 2025-06-03 (Tuesday) - iTerm2, and OhMyZsh

Installed iTerm2 (finally), and OhMyZsh which is a plugin manager for zsh (z shell), and requires prior zsh installation

1

2

# install iTerm2

brew install --cask iterm2

To find out the default terminal shell: echo $SHELL

~/.zshrc file contains configuration for zsh. For theme, I’ve changed the default to “bira”.

Plugins available in OhMyZsh are in the folder: ~/.oh-my-zsh/plugins/ (ships with installation), and ~/.oh-my-zsh/custom/plugins (custom, or downloaded plugins)

To enable or disable plugins, update ~/.zshrc file, go to line plugins=(plugin_name plugin_name) e.g. plugins=(git web-search) enables both git and web-search plugins.

Notable plugins include:

e.g.

1

plugins=(git web-search python pyenv virtualenv pip zsh-autosuggestions zsh-syntax-highlighting)

To activate the changes, reload zsh by using the command zsh

Resources

61. 2025-06-02 (Monday) - Apache Spark

Topic: Spark Streaming, GraphX

60. 2025-05-26 (Monday) - Apache Spark

Topic: Spark ML

59. 2025-05-21 (Wednesday) - Apache Spark

Topic: Troubleshooting Spark

58. 2025-05-20 (Tuesday) - Apache Spark

Topic: Amazon EMR (Elastic Map Reduce), Partitioning

57. 2025-05-19 (Monday) - Apache Spark

Topic: Spark Connect

56. 2025-05-14 (Wednesday) - Apache Spark

Topic: Pandas on Spark, UDF and UDTF

55. 2025-05-13 (Tuesday) - Apache Spark

Topic: Spark SQL

54. 2025-05-07 (Wednesday) - Apache Spark

Topic: Spark SQL

53. 2025-05-06 (Tuesday) - Apache Spark

Topic: Map vs Flatmap, sorting

52. 2025-05-05 (Monday) - Apache Spark

Topic: Filtering RDDs, and summarizing min and max

51. 2025-04-29 (Tuesday) - Apache Spark

Topics: Spark 3.5 and Spark 4 features, Introduction to Spark, Introduction to RDDs

50. 2025-04-28 (Monday) - Apache Spark

Topic: Setting up and Getting Started

Here are the rest of the notes: Apache Spark

49. 2025-04-23 (Wednesday) - Migration essentials for Azure and AI workloads

Topic: Accelerate your migration, modernization, and innovation journey to Azure (link)

Cloud adoption framework - collection of documentation, implementation guidance, best practices, and tools from Microsoft to accelerate cloud adoption journey

48. 2025-04-22 (Tuesday) - Generative AI Solution Development

Topic: Assembling and Evaluating a RAG Application

This is the last section for the course, and so I’ve finished the course today 🎉

Steps

- Development

- doc/ data pipeline development loop

- chain development loop

- Expert/User testing

- staging deployment to web chat app

- Offline evaluation

- evaluation harness

- Production

- production deployment

- Monitoring and logging

- in all steps

MLflow, (including MLflow model registry) can facilitate development of RAG solutions

Evaluating RAG Pipeline - metrics

- retrieval related metrics

- context precision

- signal-to-noise ration for the retrieved context

- context relevancy

- measures relevancy of the retrieved context

- context recall

- measures the extent tp which all relevant entities and information are retrieved and mentioned in the context provided

- context precision

- generation related metrics

- faithfulness

- measures the factual accuracy of the generated answer in relation to the provided context

- answer relevancy

- assesses how pertinent and applicable the generated response is to the user’s initial query

- answer correctness

- measures the accuracy of the generated answer when compared to the ground truth

- faithfulness

47. 2025-04-21 (Monday) - Generative AI Solution Development

Topic: Vector Search

Vector Database

- a database optimized to store and retrieve high-dimensional vectors such as embeddings; can handle sparse data i.e. data with lots of zeros

- uses include RAG, recommendation engines, similarity search

Measures of vector similarity

- distance metrics

- similarity metrics

Vector search strategies (at scale)

- K-nearest neighbors (KNN)

- Approximate nearest neighbors (ANN)

- Hierarchical navigable small worlds (HNSW)

Reranking

- a method of prioritizing documents most relevant to user’s query

Mosaic AI vector search

- Databricks offering

- stores vector representation of data, plus metadata

46. 2025-04-15 (Tuesday) - Generative AI Solution Development

Topic: Preparing Data for RAG Solutions

Simple data prep process

- Ingest and pre-process external sources

- Chunk the texts

- Extract the embeddings from each chunk

- Save embeddings into vector store

Different ways to chunk data

- Context-aware chunking - chunk by sentence / paragraph / section

- Fixed-size chunking - divide by a specific number of tokens; simple and computationally cheap

- Experiment: Chunking by sentence, or chunking by multiple paragraphs

- Overlap chunks

- Windowed summarization - each chunk contains a windowed summary of previous few chunks; context-enriching

- Prior knowledge of user’s query patterns can be helpful; ensure that the semantics on the query side are going to match the semantics on the index side

- Summarization - sections of the documentations are summarized by LLMs and then embeddings are calculated from each summary

Tips on Embedding

- Choose the embedding model wisely. The embedding model should represent BOTH queries and documents

- Ensure similar embedding space for both queries and documents

45. 2025-04-14 (Monday) - Generative AI Solution Development

There is an on-going Databricks Event Virtual Learning Festival that features learning pathways in data analytics, data engineering, machine learning, and generative AI.

I checked out the Generative AI Engineering pathway since, out of all the topics, this is the one I’m least familiar with (having used Databricks previously for DA/DE/ML).

I’m currently on the first course Generative AI Development

Topic: From Prompt Engineering to RAG

Here are some interesting things I’ve learned:

Prompt Engineering Techniques

- Zero Shot / Few Shot programming

- not using / using an example

- Prompt Chaining

- tasks are broken into subtasks

- the output of one prompt serves as the input for the next

- Chain-of-Thought prompting

- guide the LLMs by prompting them to articulate their thought process step-by-step

Tips

- Prompts are model-specific; different models may require different prompts

- Format prompts; use delimiters, ask model to return structured output

- Guide the model for better responses

- Ask the model not to hallucinate: “Do not make things up if you do not know. Say ‘I do not have that information’.”

- Ask the model not to assume or probe sensitive information.

- Ask the model not to rush to a solution

RAG

- Retrieval augmented generation

- not to be confused with DAG (direct acyclic graph ) which, in data engineering, describes workflows or pipelines in orchestrations

- a pattern that can improve the efficacy of LLM applications by leveraging custom data

- done by retrieving data/documents relevant to a question or tasks and providing them as context to augment the prompts to an LLM so as to improve generation

44. 2025-04-11 (Friday) - Microsoft AI Skills Fest

Topics: Copilot AI Agent

I’ve viewed the topic here, which is also a Microsoft Learn course here. The repo for the activity / hands-on is also here.

My main takeaways are:

- Copilot Agent is really just a copilot. As a developer, you’d still need to know your stuff in order to steer the AI in the direction you want it. Case in point, the suggestions can make mistakes and/or the AI-generated code might not be ideal for production.

- It was fascinating to see how the agent can do self-healing: when an error is encountered, it’s able to diagnose what the error means and provide the reason(s) and/or workaround(s) in order to solve the error.

- Still, this Copilot AI Agent seems pretty great for scaffolding code which we know can get pretty repetitive.

I’ve also noticed that my own VS Code Copilot has the “Agent” feature already which means this is already generally available.

43. 2025-04-10 (Thursday) - Microsoft AI Skills Fest

Topics: Writing effective prompts, Working with AI responsibly

Continuing on this video from Microsoft Skills Fest: AI in Action: Unlock Productivity at work.

Also sharing my AI Skills Fest Festival Participation Badge.

Writing effective prompts

Best Practices:

- Be specific about what you want Copilot to do.

- Add some context to help Copilot understand what you’re asking.

- Provide some examples for Copilot to use.

- Let Copilot know how you want the response to be formatted.

The art of the prompt

- Goal: What do you want from Copilot

- Context: Why do you need it and who is involved?

- Source(s): What information or samples do you want Copilot to use?

- Expectations: How should Copilot respond to best fulfill your request?

Working with AI responsibly

Checklist

- Use clear, specific prompts to get relevant AI output

- Verify accuracy by cross-checking AI-generated content with trusted sources

- Watch for biases (gender, racial, socioeconomic) and adjust content as needed

- Edit AI-generated content to ensure clarity, fairness, and alignment with your goals.

- Respect privacy - avoid sharing sensitive or personal data with AI tools

- Be transparent - disclose AI-generated content in professional or public use

Watch for common biases:

- Gender bias. AI systems can perpetuate gender stereotypes if trained on biases date.

- Racial bias. AI systems can exhibit racial bias, leasing to discriminatory outcomes.

- Socioeconomic bias. AI systems can favor individuals from higher socioeconomic backgrounds.

- Confirmation bias. AI models learn from existing patterns, so if the data is skewed, AI may reinforce one-sided perspectives.

42. 2025-04-09 (Wednesday) - VS Code, Microsoft AI Skills Fest

Topic: VS Code Step debugging

Add a breakpoint in the code. Click “Run and Debug”

Step over runs the next line. Step in runs the line inside loops or functions, Step out runs the next line outside the current loop or function.

Topic: AI in Action: Unlock Productivity at Work

I’ve registered for Microsoft’s AI Skills Fest and viewed the session on how AI can be used to be more productive at work.

I learned about Copilot Agent that allows users to create basic AI agents without coding

41. 2025-04-03 (Thursday) - Apache Kafka

Topic: Controller, Producers, and Consumers

40. 2025-04-02 (Wednesday) - Apache Kafka

Topic: Spreading messages across partitions, partition Leader and Followers

39. 2025-03-31 (Monday) - Apache Kafka

Topics: Messages, Topics and partitions

38. 2025-03-27 (Thursday) - Apache Kafka

Topic: Kafka Topic

37. 2025-03-25 (Tuesday) - Apache Kafka

Topics: Apache Kafka, Broker, Zookeeper, Zookeeper ensemble multiple Kafka clusters, and default ports for Zookeeper, and broker

36. 2025-03-24 (Monday) - Apache Kafka

Topic: Producing and consuming messages

35. 2025-03-20 (Thursday) - Apache Kafka

I started learning Apache Kafka. I wanted to study Flink actually but since it comes downstream of Kafka, I figured I might as well learn a bit more about Kafka first.

Topics: Introduction to Kafka, installation and starting the server using Docker compose, and from binary.

34. 2025-03-19 (Wednesday) - Python

Topic: loguru

33. 2025-03-13 (Thursday) - Python

Topic: PyAutoGUI

32. 2025-03-12 (Wednesday) - Python

Topic: Python collections module

31. 2025-03-11 (Tuesday) - Python __main__, refactoring if-else statement, slice, any, guard clause, function currying

This statement if __name__ == "__main__" ensures that only the intended functions are run in the script, as importing a module also runs the module itself.

This if else statement

1

2

3

4

if x > 2:

print("b")

else:

print("a")

Can be written like this

1

print("b") if x > 2 else print("a")

I can’t agree that the latter is readable though (or I’m just not used to it)

Using any() on an iterable

1

2

numbers = [-1,-2-4,0,-3, -7]

has_positives = any(n > 0 for n in numbers)

Writing a Guard clause so that if a statement is not true, there’s no need to run the rest of the code

slice object

1

2

3

4

5

6

7

8

9

numbers: list[int] = list(range(1, 11))

text: str = "Hello, world!"

rev: slice = slice(None, None, -1)

f_five: slice = slice(None, 5)

print(numbers[rev]) # [10, 9, 8, 7, 6, 5, 4, 3, 2, 1]

print(text[rev]) # !dlrow, olleH

print(text[f_five]) # Hello

Currying creates specialized functions based on a general function

1

2

3

4

5

6

7

8

9

10

11

def multiply_setup(a: float) -> Callable:

def multiply(b: float) -> float:

return a * b

return multiply

double: Callable = multiply_setup(2)

triple: Callable = multiply_setup(3)

print(double(2)) # 4

print(triple(10)) # 30

Refs:

30. 2025-03-10 (Monday) - GraphQL

I was looking into resources for GraphQL and found these interesting bits

- Microsoft Fabric now has GraphQL API data access layer

- The official GraphQL learning resource GrapQL Learn

- A Python package to create GraphQL endpoints based on dataclasses Stawberry

Some terms:

- Schema - defines the structure of the data that can be returned

- Types - describes what data can be queried from the API

- Queries - used to retrieve data

- Mutations - used to modify data

- Subscriptions - used to retrieve real-time updates

29. 2025-03-06 (Thursday) - End of Snowflake course 🎉

Topics: Third party tools, and best practices

28. 2025-03-05 (Wednesday) - Snowflake

Topic: Snowflake Access Management

27. 2025-03-04 (Tuesday) - Snowflake

Topics: Materialized views, and data masking

26. 2025-03-03 (Monday) - Snowflake

Topic: Snowflake streams

25. 2025-02-27 (Thursday) - Snowflake

Topics: Data sampling, and tasks

24. 2025-02-26 (Wednesday) - Snowflake

Topic: Data sharing

23. 2025-02-25 (Tuesday) - Snowflake

Topics: Table types, Zero-copy cloning, Swapping

22. 2025-02-24 (Monday) - Snowflake

Topics: Time travel, and fail safe

21. 2025-02-21 (Friday) - Snowflake

Topic: Snowpipe

20. 2025-02-20 (Thursday) - Snowflake

Topics: Loading data from AWS, Azure, and GCP into Snowflake

19. 2025-02-19 (Wednesday) - Snowflake

Topics: Performance considerations, scaling up/down, scaling out, caching, and cluster keys

18. 2025-02-18 (Tuesday) - Snowflake

Topic: Loading unstructured data into Snowflake

17. 2025-02-17 (Monday) - Snowflake

Topics: Copy options, rejected records, load history

16. 2025-02-13 (Thursday) - Snowflake

Topics: COPY command, transformation, file format object

15. 2025-02-12 (Wednesday) - Snowflake

Topics: Editions, pricing and cost monitoring, roles

14. 2025-02-11 (Tuesday) - Snowflake

Taking a step towards my goals this year, I’ve started doing a deep-dive into Snowflake through learning about it in this course Snowflake Masterclass.

Topics: Setup, architecture overview, loading data

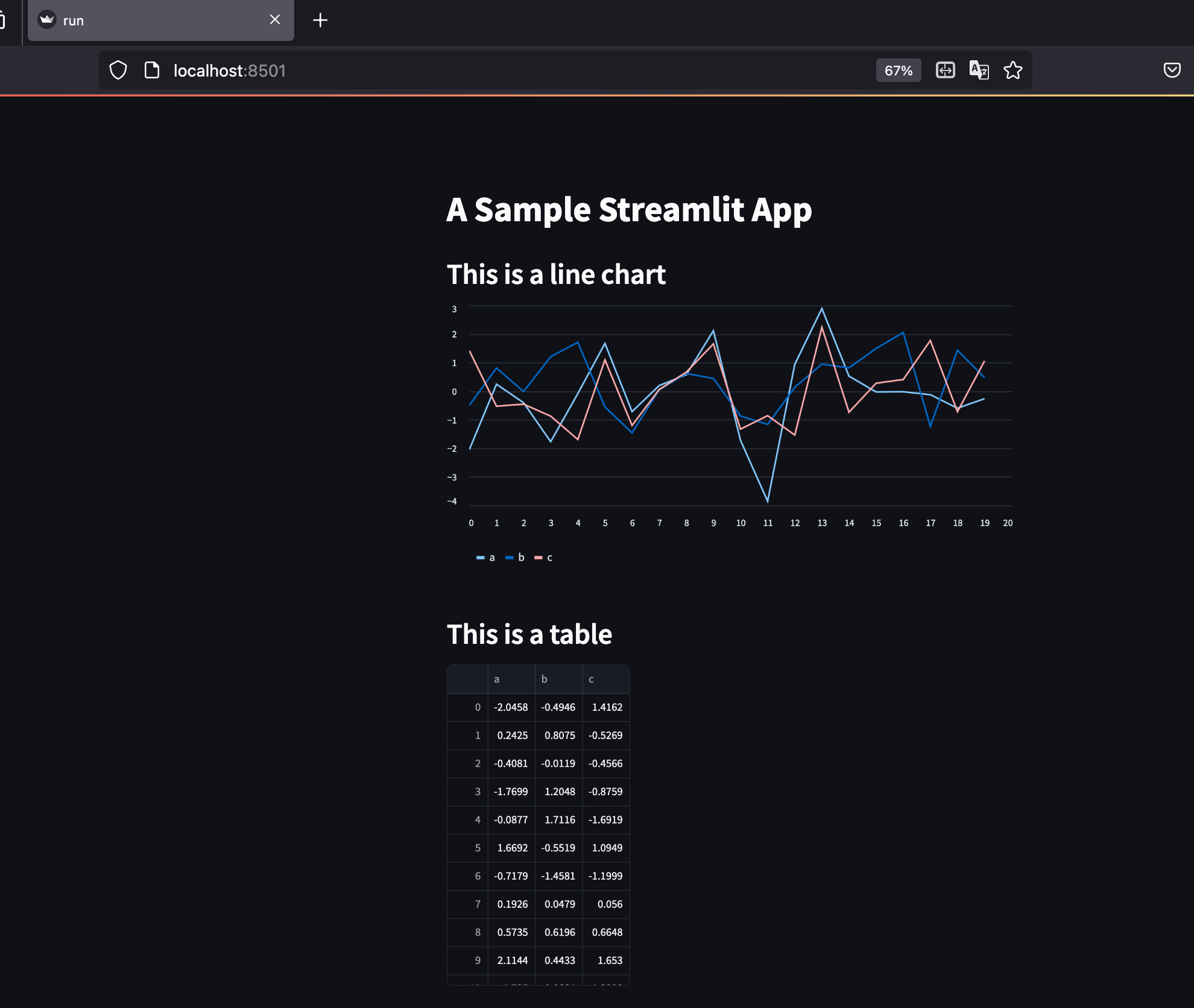

13. 2025-02-10 (Monday) - streamlit

I’ve checked out Streamlit, a Python library for creating web apps. Unlike other libraries or frameworks like Django or even Flask, Streamlit is able to spin up a web app fast using simple syntax. It is specially useful for data science and machine learning projects.

It is designed for quickly creating a data-driven web application. I’m not clear if it’s “production-quality” and opinions seem to be divided and depend on requirements or use-case.

e.g.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

import streamlit as st

import numpy as np

import pandas as pd

st.title('A Sample Streamlit App')

st.markdown('## This is a line chart')

chart_data = pd.DataFrame(

np.random.randn(20, 3),

columns=['a', 'b', 'c'])

st.line_chart(chart_data)

st.markdown('## This is a table')

st.dataframe(chart_data)

More features in the Docs

12. 2025-02-09 (Sunday) - ERD, Mermaid

Today, I reviewed ERDs and revisited Mermaid 🧜♀️

11. 2025-02-06 (Thursday) - docker, dbt-duckdb, Duckdb resources

docker

I wanted to check Docker 🐳 and see if I can try and create a container for a data pipeline. Well, that is the goal but since I don’t use Docker these days, I needed to reacquaint myself with it first.

These are some of the intro I found

- The intro to Docker I wish I had when I started

- Learn Docker in 7 Easy Steps - Full Beginner’s Tutorial

- Containerize Python Applications with Docker

an example of Dockerfile

1

2

3

4

FROM python:3.9

ADD main.py .

RUN pip install scikit-learn

CMD ["python", "./main.py"]

.dockerignore specifies the files or paths that are excluded when copying to the container

Ideally, there is only one process per container

docker-compose.yml - for running multiple containers at the same time

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

version: '3'

services:

web:

build: .

ports:

- "8080:8080"

db:

image: "mysql"

environment:

MYSQL_ROOT_PASSWORD: password

volumes:

- db-data:/foo

volumes:

db-data:

docker-compose up to run all the containers together

docker-compose down to shutdown all containers

Will revisit this topic in the succeeding days.

dbt-duckdb

Here is the repo for the adapter: dbt-duckdb

Installation should be

pip3 install dbt-duckdb

Duckdb resources

Putting these resouces here:

- Duckdb tutorial for beginners

- YT Channel @motherduckdb

I just realized that this feature solves some of my challenging tasks: Since Duckdb is able to read a CSV and execute SQL query on it, there’s no need to open a raw CSV just to check the sum of columns for example. The computation is in-memory too by default so no need to persist a database for quick analysis.

1

SELECT * FROM read_csv_auto('path/to/your/file.csv');

or just using the terminal

1

$ duckdb -c "SELECT * FROM read_parquet('path/to/your/file.parquet');"

10. 2025-02-05 (Wednesday) - dbt-Fabric, Fireducks

dbt-Fabric

I was looking around for how to integrate dbt to Azure, and I found these resouces

The process looks straight-forward

- Install the adapter in the virtual environment with Python 3.7 and up

pip install dbt-fabric - Make sure to have the Microsoft ODBC Driver for Sql Server installed

- Add an existing Fabric warehouse

- The dbt profile in the home directory needs to be setup

- Connect to the Azure warehouse, do the authentication

- Check the connections

- Then the dbt project can be built

Aside from Fabric, dbt also has integrations with other Azure data platforms:

- Synapse

- Data Factory

- SQL Server (SQL Server 2017, SQL Server 2019, SQL Server 2022 and Azure SQL Database)

Here is the docs for other dbt core platform connections

Fireducks

There’s another duck in the data space: Fireducks . It’s not related to DuckDB, instead it allows existing code using pandas to be more performant; it’s fully compatible with Pandas API.

This means there’s zero learning cost as all that’s needed is to replace pandas with fireducks and the code should be good to go

1

2

# import pandas as pd

import fireducks.pandas as pd

or via terminal (no need to change the import)

1

python3 -m fireducks.pandas main.py

Whereas for polars, the code would need to be rewritten; polars code is closer to PySpark.

e.g.

1

2

3

4

5

6

7

8

9

10

11

12

13

import pandas as pd

import polars as pl

from pyspark.sql.functions import col

# load the file ...

#

# Filter rows where 'column1' is greater than 10

# pandas

filtered_df = df[df['column1'] > 10]

# polars

filtered_df = df.filter(pl.col('column1') > 10

# PySpark

filtered_df = df.filter(col('column1') > 10)

Here’s a comparison of the performance: Pandas vs. FireDucks Performance Comparison. It surpassed both polars and pandas

9: 2025-02-04 (Tuesday) - Snowflake, Duckdb, Isaac Asimov Books

I’m taking a break from learning dbt, and switched focus to learning about databases: Snowflake, and Duckdb. Also, I took a bit of a break so that I can catch up on reading Isaac Asimov’s series.

Snowflake virtual warehouse

I found this free introducton in Udemy Snowflake Datawarehouse & Cloud Analytics - Introduction. This may not be the best resource out there though as it hasn’t been updated. However, I learned about provisioning a virtual warehouse in Snowflake with the following example commands

Virtual warehouse

- collection of compute resources (CPUs and allocated memory)

- needed to query data from snowflake, and load data into snowflake

- autoscaling is available in enterprise version but not in standard version

Create a virtual warehouse

1

2

3

4

5

6

7

8

CREATE WAREHOUSE TRAINING_WH

WITH

WAREHOUSE_SIZE = XSMALL --size

AUTO_SUSPEND = 60 -- if idle for 60s will be suspended automatically

AUTO_RESUME = TRUE -- if use executes a query, it will automatically resume on it's own without having to manually restarted the warehouse

INITIALLY_SUSPEND = TRUE

STATEMENT_QUEUED_TIMEOUT_IN_SECONDS = 300

STATEMENT_TIMEOUT_IN_SECONDS = 600;

Alternatively, use the user interface as well

Create database

1

2

3

CREATE DATABASE SALES_DB

DATA_RETENTION_TIME_IN_DAYS = 0

COMMENT = 'Ecommerce sales info';

Create schema

1

2

3

create schema Sales_Data;

create schema Sales_Views;

create schema Sales_Stage;

Use the warehouse

1

2

3

USE WAREHOUSE_TRAINING_WH;

USE DATABASE SALES_DB;

USE SCHEMA Sales_Data;

Command to find the current environment

1

SELECT CURRENT_DATABASE(), CURRENT_SCHEMA(), CURRENT_WAREHOUSE();

Here’s a demo of Snowflake features. I learned that Snowflake has it’s own data marketplace, and also has a dashboard feature.

Here are others resources for Snowflake

Duckdb overview

Another database that I’ve been hearing about is Duckdb, and I’m honestly very interested in this one as it is light-weight and open-source. It can run in a laptop, and can process GBs of data fast.

It’s a file-based database which reminds me of SQLite except that DuckDB is mostly for analytical purposes (OLAP) rather than transactional (OLTP). It utilizes vectorized execution as opposed to tuple-at a time (row-based database), or column-at-a-time execution (column-based database). It sits somewhere between row-based and column-based in that the data is processed by columns but operates on batches of data at a time. Because of this, it is more memory efficient than column execution (e.g. pandas).

Here is Gabor Szarnyas’ presentation about Duckdb which talks in detail about Duckdb capabilities.

Duckdb isn’t a one-to-one comparison with Snowflake, though as it can only scale by memory, and is not distributed (nor with Apache Spark for that matter). It also runs locally. A counterpart to this is MotherDuck, which is a cloud data warehousing solution built on top of Duckdb (kind of like dbt Cloud to dbt core).

As a side note, I was delighted to learn that DuckDB has a SQL command to exclude columns! (Lol I know but you have no idea how cumbersome it is to write all 20+ columns only to exclude a few :p)

example from Duckdb Snippets

1

2

3

// This will select all information about ducks

// except their height and weight

SELECT * EXCLUDE (height, weight) FROM ducks;

Whereas the top Stack Overflow solution for this is

1

2

3

4

5

6

7

8

9

/* Get the data into a temp table */

SELECT * INTO #TempTable

FROM YourTable

/* Drop the columns that are not needed */

ALTER TABLE #TempTable

DROP COLUMN ColumnToDrop

/* Get results and drop temp table */

SELECT * FROM #TempTable

DROP TABLE #TempTable

I know explicitly writing column names is for “contracts” and exclude isn’t very production quality but it would be immensely useful in CTEs where the source columns have already been defined previously.

Okay end of side note :p

Do you think it’s normal to fan over a database? No pun intended. : ))

Three Laws of Robotics

I’m currently reading Isaac Asimov books. I’m on Book 2 (Foundation and Empire) of the Foundation Series. I kind of wanted to read the books before I watch Apple TV’s Foundation but I realized the series is totally different from the books. It was like preparing for an exam only to get entirely out-of-scope questions (which has happened too many times before :p)

Spoiler:

Anyway, the Three Laws of Robotics (aka Asimov’s Laws) didn’t originate in the Foundation Books (I just really want to talk about it :p ) but in one of his short stories in the I, Robot collection.

Spoiler:

The Three Laws of Robotics state:

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

The Three Laws of Robotics originally came from a science-fiction story in the 1940’s but it’s amazing how forward-looking it is. I’m not really into science-fiction books (I’d rather not mix the two :p) but I’m pulled into Asimov’s world nonetheless.

8: 2025-02-02 (Sun) - dbt

dbt - certifications and finished the course

I’ve finished the course today 🎉.

I’ve also moved the dbt notes into another post to keep the log tidier.

The last section is an interview about the official dbt certifications. I’m still not sure about doing the certification at this point (I kind of want to get more hands-on time with the tool first) but I like what the interviewee said about doing certifications - you learn a lot more about the tool, faster compared to just using it everyday. For me, I do get a lot of gains studying for certs. If I didn’t have those stock knowledge in the first place, it would have been a lot harder to think of other, better ways of approaching a problem. Mostly my qualms about doing certs is how expensive they are! :p

7: 2025-02-01 (Sat) - dbt

dbt - Advance Power user dbt core, introducing dbt to the company

Today isn’t as technical as the last couple of days. I’ve covered more features of the Power User for dbt core extension powered with AI, and tips on introducing dbt to the company.

The course is wrapping up as well, and I only have one full section left about certifications.

I learned about

- Advance Power User dbt core

- Introducing dbt to the company

6: 2025-01-31 (Fri) - dbt

dbt - variables, and dagster

Today I’ve covered dbt variables and orchestration with dagster. It was my first time setting up dagster. I actually liked dagster because the integration with dbt is tight. I was a bit overwhelmed though with all the coding at the backend to setup the orchestration. It might get easier if I take a deeper look at it. For now, it seems like a good tool to use with dbt.

5: 2025-01-30 (Thu) - dbt

dbt - great expectations, debugging, and logging

I’ve covered these topics today: dbt-great-expectations, debugging, and logging.

The dbt-great-expectations package, though not really a port of the Python package, contains many tests that are useful for checking the model. I’m glad ge was adapted into dbt as it’s also one of the popular data testing tool in Python.

4: 2025-01-29 (Wed) - dbt

dbt - snapshots, tests, macros, packages, docs, analyses, hooks, exposures.

Today was pretty full. I’ve covered dbt snapshots, tests, macros, third-party packages, documentation, analyses, hooks, and exposures. Not sure how I completed all of these today but these are pretty important components of dbt. I’m amazed about the documentation support in this tool, and snapshot is another feature in which I say “where has this been all my life?” To think that SCD is that straight-forward in dbt.

3: 2025-01-26 (Sun) - dbt

dbt - seeds, and source

Today, I’ve covered dbt seeds, and source. At first I thought - why did they need to relabel these CSV files and raw tables? The terminologies were a bit confusing but I guess I should just get used to these.

2: 2025-01-25 (Sat) - dbt, books, Python package, Copilot

dbt - models, and materialization

I’ve covered dbt models, and materialization. These are pretty core topics in dbt - because dbt is all about models!

Books for LLM, and Polars

Here are some materials I came upon today

LLM Engineer’s Handbook [Amazon] [Github]

- a resource when I get around to exploring LLMs

Polars Cookbook [Amazon]

pandera

panderais a Union.ai open source project that provides a flexible and expressive API for performing data validation on dataframe-like objects to make data processing pipelines more readable and robust. Dataframes contain information that pandera explicitly validates at runtime. - [Docs]

Here’s an example from the docs. It’s interesting because it seems like a lighter version of Great Expectations wherein the data can be further validated using ranges and other conditions. It’s powerful for dataframe validations.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

import pandas as pd

import pandera as pa

# data to validate

df = pd.DataFrame({

"column1": [1, 4, 0, 10, 9],

"column2": [-1.3, -1.4, -2.9, -10.1, -20.4],

"column3": ["value_1", "value_2", "value_3", "value_2", "value_1"],

})

# define schema

schema = pa.DataFrameSchema({

"column1": pa.Column(int, checks=pa.Check.le(10)),

"column2": pa.Column(float, checks=pa.Check.lt(-1.2)),

"column3": pa.Column(str, checks=[

pa.Check.str_startswith("value_"),

# define custom checks as functions that take a series as input and

# outputs a boolean or boolean Series

pa.Check(lambda s: s.str.split("_", expand=True).shape[1] == 2)

]),

})

validated_df = schema(df)

print(validated_df)

In Pydantic, something like this can also be used

1

2

3

4

5

6

7

8

9

from pydantic import BaseModel, conint, confloat

class Product(BaseModel):

quantity: conint(ge=1, le=100) # Validates that quantity is between 1 and 100

price: confloat(gt=0, le=1000) # Validates that price is greater than 0 and less than or equal to 1000

product = Product(quantity=10, price=500)

print(product)

Copilot installation, an update

The VS Code plugin that I was trying to install yesterday is working now and I am able to access chat on the side panel as well as see the prompts on screen. Not really sure what fixed it but it could be the reboot of my computer.

1: 2025-01-24 (Fri) - dbt, Copilot

dbt - introduction ,and setting up

I’m going through the dbt course in Udemy The Complete dbt (Data Build Tool) Bootcamp: Zero to Hero. I’ve setup a dbt project and created a Snowflake account.

It was between this and dbt Learn platform - I might go back to that for review later. Lecturers worked in Databricks and co-founded dbt Learn so I decided to do this course first - and in the process, subtract from the ever growing number of Udemy courses that I haven’t finished :p

Github Copilot

As an aside, I got distracted because the lecturer’s VS Code has Copilot enabled so I tried to setup mine. The free version is supposed to be one click and a Github authentication away but for some reason it’s buggy in my IDE. Leaving it alone for now.

0: 2024-11-16 (Sat) to 2025-01-08 (Wed) - DataExpert Data Engineering Bootcamp

DE Bootcamp

I finished Zach’s Free YouTube Data Engineering bootcamp (DataExport.io) which started November last year and will run until February 7 (the deadline was extended from last day of January).

The topics covered were:

- Dimensional Data Modeling

- Fact Data Modeling

- Apache Spark Fundamentals

- Applying Analytical Patterns

- Real-time pipelines with Flink and Kafka

- Data Visualization and Impact

- Data Pipeline Maintenance

- KPIs and Experimentation

- Data Quality Patterns

Zach Wilson did a good job of explaining the topics (I’m also very impressed with how well he can explain the labs while writing code without missing a beat). The Data Expert community was also an incredible lot, as some of the setup and homeworks were complicated without prior exposure.

It was a challenging 6 weeks of my life with lectures, labs, and homeworks so much so that there was some lingering emptiness when my schedule freed up as I finished the bootcamp. I’m glad I went through it and it’s a good jumping off point for my learning goal this year.

Sharing the link to my certification.